The digital world, captivated by the promises of a fourth industrial revolution driven by artificial intelligence (AI), seems to overlook the environmental cost it incurs. Behind the green credentials the industry enthusiastically advertises, the rise of so-called AI carries a clear drawback: the intensive use of water in the data centers of major companies such as Microsoft and Google.

Academic assessments of water consumption.

A forthcoming study by Shaolei Ren of the University of California, Riverside, has raised an alarm about the environmental sustainability of AI-dedicated data centers. Its primary focus is ChatGPT, developed by OpenAI, a company in which Microsoft is a major investor. The study estimates that for every session of 5 to 50 questions or prompts, the model “consumes” about half a liter of water. This consumption varies significantly depending on where the servers are located and on the local climate.
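
Spreading the half-liter estimate across a session gives a rough per-prompt figure. The sketch below assumes, purely for illustration, that the water is shared equally among the prompts in a session; the function name is hypothetical, and the result inherits all the uncertainty of the original estimate.

```python
def water_per_prompt_ml(session_water_ml: float, prompts: int) -> float:
    """Average water per prompt, assuming equal sharing across the session (illustrative)."""
    if prompts <= 0:
        raise ValueError("prompts must be positive")
    return session_water_ml / prompts


# Half a liter (500 mL) spread over the 5-50 prompt range cited in the study.
for n in (5, 50):
    print(f"{n} prompts: {water_per_prompt_ml(500, n):.0f} mL per prompt")
```

Under that simplifying assumption, each prompt accounts for roughly 10 to 100 mL of water.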

The companies' own annual environmental reports point to a sharp rise in natural resource consumption. In 2022, Microsoft's global water consumption rose by 34% to 6.4 million cubic meters, an increase attributed mainly to the computing capacity needed to train ChatGPT. Google, equally involved in AI, reported a 20% year-over-year increase in its water consumption.
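
Working backwards from Microsoft's reported figures shows the scale of the jump. This is a minimal sketch, assuming the 34% is a simple year-over-year increase on 2021 consumption; since 6.4 million is itself a rounded figure, the result is approximate, and the helper name is ours, not from any report.

```python
def previous_value(current: float, pct_increase: float) -> float:
    """Value before a percentage increase: current / (1 + pct/100)."""
    return current / (1 + pct_increase / 100)


microsoft_2022_m3 = 6.4e6  # reported 2022 consumption, cubic meters
microsoft_2021_m3 = previous_value(microsoft_2022_m3, 34)

print(f"Implied 2021 consumption: {microsoft_2021_m3 / 1e6:.2f} million m3")
print(f"Added in one year: {(microsoft_2022_m3 - microsoft_2021_m3) / 1e6:.2f} million m3")
```

On these assumptions, 2021 consumption was roughly 4.8 million cubic meters, so about 1.6 million cubic meters were added in a single year.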

Microsoft’s strategic choice to locate three data centers in West Des Moines, Iowa, shows how much climate matters. The temperate climate reduces the need for cooling compared with other regions, so the cooling systems draw significant amounts of water from the municipal supply mainly during hot summers.

Although it is a known problem, the water intensity of data centers remains surprisingly underdiscussed. Cases such as the 2020 controversy in Oregon, where Google’s data centers were accused of consuming enormous amounts of water, show that the problem is not limited to individual locations. Initiatives such as Microsoft’s experiments with data centers on the seafloor (Project Natick) reflect the search for innovative solutions.

The development of liquid cooling.

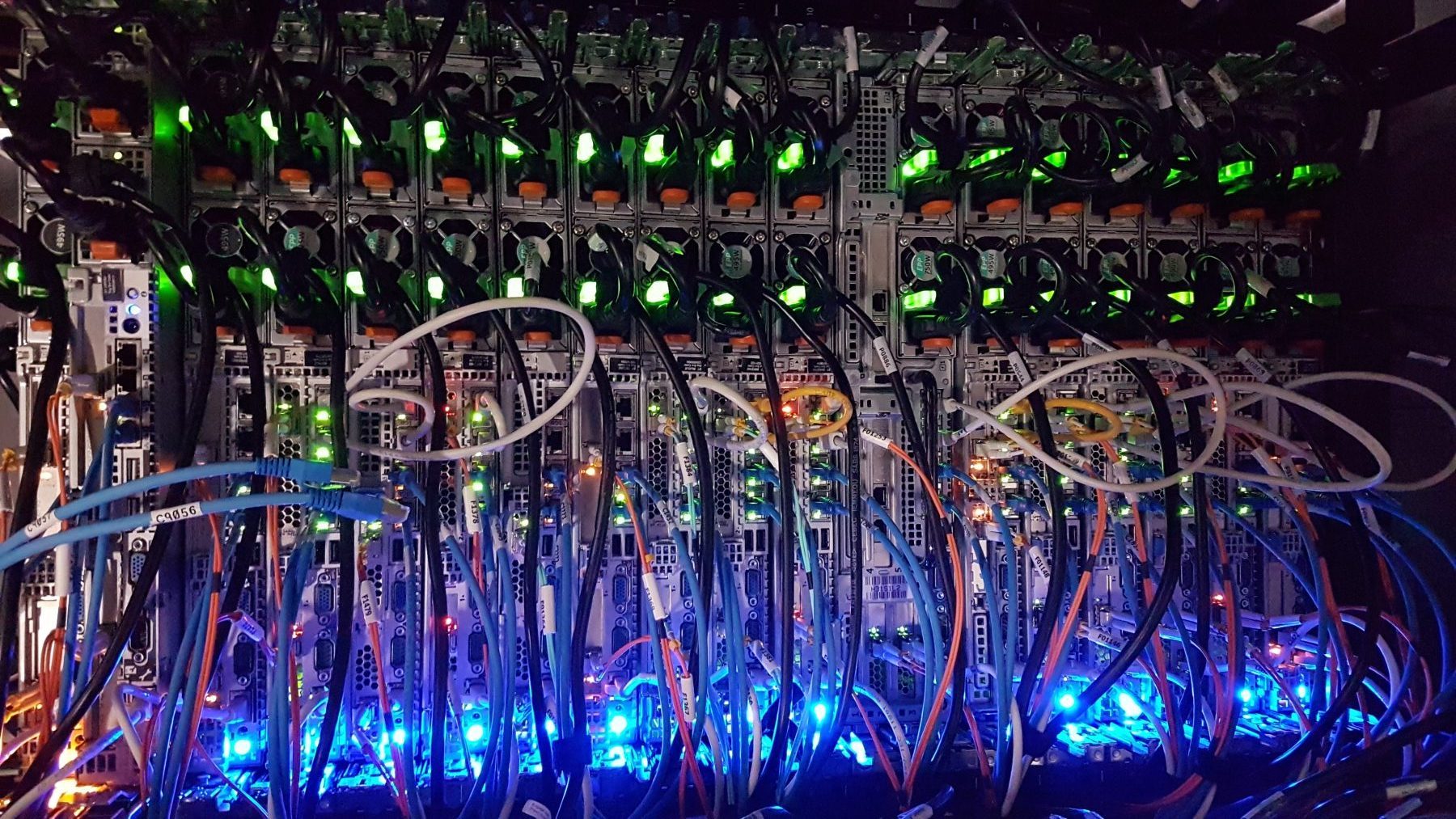

The hardware required to power these high-intensity computing applications is already exceeding the capabilities of traditional air cooling. To prepare for this future, the data center industry is adopting liquid cooling, which uses a liquid, often water, instead of air to carry heat away more efficiently, reducing both energy consumption and the space required.

Liquid cooling, initially a niche solution, is rapidly gaining ground worldwide. According to a recent report by the research firm IMARC Group, the liquid cooling market reached $2.9 billion in revenue in 2023 and is projected to grow at a compound annual rate of 19.5%, reaching $15.3 billion by 2032.
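
The projection can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes a nine-year horizon from the 2023 base to 2032; the report's exact forecast window and rounding may differ, which is one reason the implied rate need not match 19.5% exactly. Function names are illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


start_2023, end_2032 = 2.9, 15.3  # billions of USD, as reported
years = 2032 - 2023  # assumed nine-year horizon

print(f"Implied CAGR: {cagr(start_2023, end_2032, years):.1%}")
print(f"2032 value at 19.5%: ${project(start_2023, 0.195, years):.1f} billion")
```

With these inputs the implied rate is about 20.3%, and compounding $2.9 billion at 19.5% for nine years gives about $14.4 billion: close to the reported figures, with the gap possibly due to rounding or a different base year.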

Data centers, notoriously energy-intensive, contribute significantly to greenhouse gas emissions and global electricity consumption. Simply building new data centers or expanding existing ones is no longer sustainable. The key to meeting the growing demand for computing capacity is to get more performance out of existing infrastructure, and liquid cooling could be an effective way to do so.

Liquid cooling offers several advantages: it can dissipate ten times more heat than air while using one-tenth of the energy for the same level of cooling. Operators can therefore choose between saving energy and running more computing power without increasing the number of servers. Liquid-cooled installations can also be up to four times more compact than air-cooled ones.

However, integrating liquid cooling into existing data centers requires careful design and upfront investment.

In conclusion, enthusiasm for the digital and AI revolution must be tempered by awareness of its environmental costs. As digital technology advances, it is essential to treat natural resources, especially water, as the new oil, and to seek sustainable solutions that balance technological innovation with environmental responsibility.